Modern "Art"

Ths Is Afrrrca

Another word, this one on the illustrations that might pop up in these posts. All the official artwork (like the de-horned rhino for this page’s profile image) for This is Africa was done - by hand - by my very talented, very definitely real human friend Claudia. (You, uhhhhh, wouldn’t know her - she goes to a different school.)

It was important to me that I work with an actual person for at least some of this project, especially since so much of the book was written with the help of ChatGPT. I’ll tell you what, writing a book is so easy these days:

“Write me a riveting chapter about a shark dive that very nearly ends in disaster.”

Yes sir. Here you go.

“Good work, but it’s not quite funny enough. Add in a couple of snarky one-liners, but make sure the protagonist doesn’t become too unlikeable.”

Done.

“Better. Now add a poignant, moving reflection on overfishing and habitat destruction. Oh, and a bit about the impact humans have had on animal migratory patterns.”

I’m only kidding; no such help was enlisted. But I did find out about the image creation feature of ChatGPT (it’s called Dall-E) and played around with it to make some mock-up covers. And I had so much fun with it that I’ll be throwing them into these posts.

I’d never really used AI before - I’m behind the curve on this stuff, so I suspect there are more advanced, heavy-duty image/art generators out there… in fact, I know there are: I recently saw a video about a fitness influencer with thousands of followers who is actually just AI… but people even subscribe/send her money, I think?? Or maybe she [her owner] just rakes in money from ad revenue? Either way, incredible. What’s not clear to me is whether “her” average follower knows she’s AI. They must do, right? But, if so, that raises even bigger questions: I’d previously thought the driver behind following your favorite airbrushed bikini model(s) was - whether anyone would admit it or not - that you wanted to be noticed by/in contact with her. You’re hoping, at some level, that you’re going to hear that new message ping in the middle of the night and it’s going to be her saying “Hey, thanks so much for commenting those three or four fire emojis under every one of my posts for the last three years. Ummmm, this is gonna sound dumb, but you wouldn’t want to meet for coffee, would you?”

But maybe this is how things are now: the followers do know and simply don’t care; following these accounts has become purely pornographic/voyeuristic. But I still think there’s a tiny grain of longing for physical connection with the model/sex worker somewhere, however buried or repressed that grain may be. I’m just not convinced you can ever escape that primal instinct (to reproduce, that is). (If I’m wrong, though, it certainly paints a pretty bleak picture.)

To finish my train of thought on the art thing, though: I got sucked into the rabbit hole (I really recommend it). To clarify: not the rabbit hole of making an AI fitness/OnlyFans model, just of making art related to different characters and stories from my book. (…I swear!)

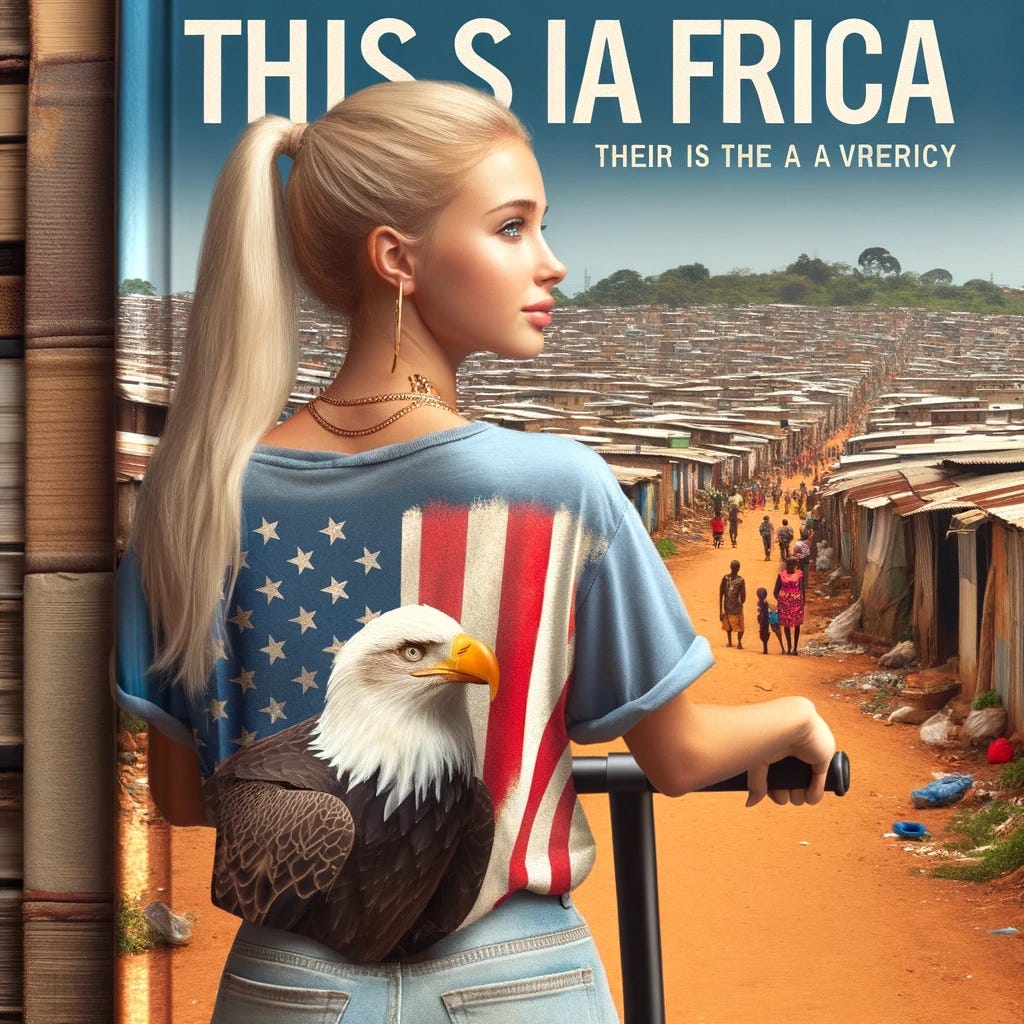

Really, though, I’m not sure the former would have even been possible with the software I had. One of my ideas did involve a girl in a bikini (just hanging out on a beach, mind you, not doing anything naughty). But when I tried to describe what I wanted, the AI was quick to say the image I was requesting wouldn’t be possible, as it wasn’t allowed to create any images that were pornographic or otherwise offensive. But it wasn’t clear what the specific boundaries of its creative discretion were. In the end, I did coax it into dropping the prude act and showing daddy a little skin, and it coughed up a few different girls. Not for nothing, it seemed perfectly happy to give them perfect hourglass figures, perky D-cups, flawless, supple skin, etc. etc. It even gave me a topless one, albeit she was facing away from the camera. But still! This meant it clearly had an understanding of what conventional attractiveness/sex appeal looked like, and knew how to go right up to whatever boundaries it’d been given.

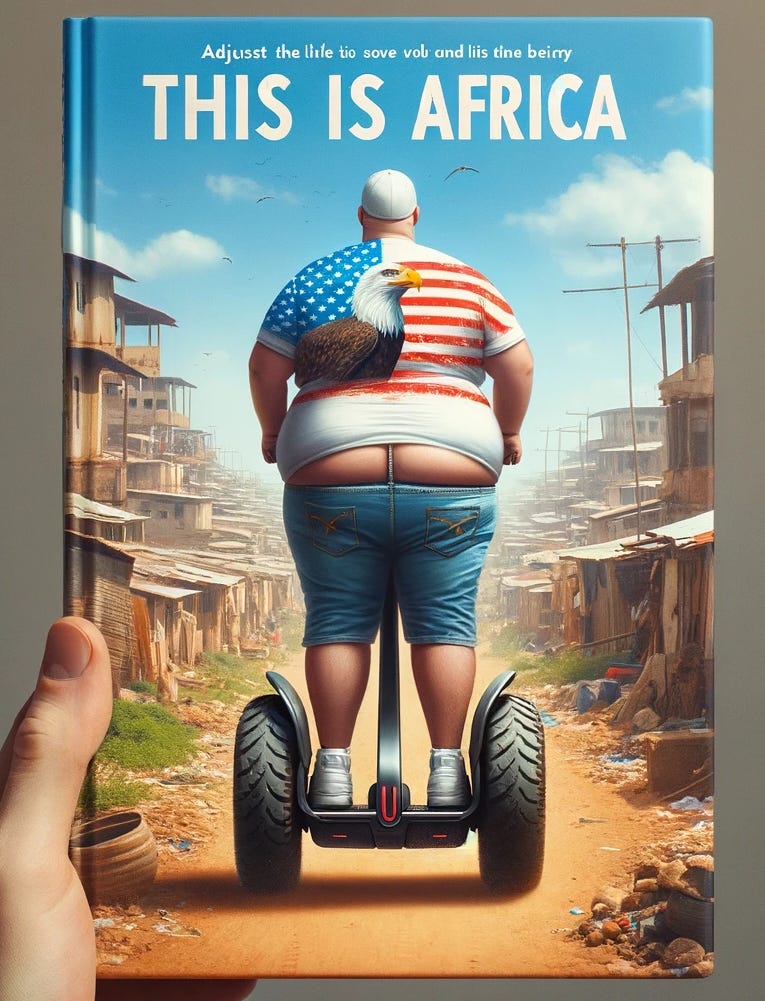

Once it warmed up to me, Dall-E even hinted at having a sense of humor: one of my requests involved a fat American guy on a Segway. He (Dall-E) gave me what I asked for, but didn’t stop there. He seemed to intuit that fat people are to be ridiculed. Or that Americans are to be ridiculed. Or that Segway riders are to be ridiculed. Anyway, he not only drew the man, but gave him jean shorts that hadn’t been hitched up properly, exposing the top few inches of his hairy asscrack (and a g-string wedgie, it seems like!?). Why did it do this? A little sophomoric, maybe, but I still think it requires a pretty good understanding of comedic timing and context, does it not? After all, it hadn’t done this to any of the girls (…to my chagrin). It’s pretty amazing, you’ve gotta admit (it added the AI hand, by the way):

The interesting counterpoint to this was its inability to grasp even the most basic instructions, as you’ll notice at the top of the image. It would spit out a complex image (butt crack and all), but the text would be “This is Afrrra.” I’d tell it to try again, and it would fix Africa, but give me “Thsi” instead of “This.” Eventually, it would get This is Africa right, but would inexplicably add a subtitle of utter gibberish.

This is Africa:

Gmadda ud Hoadpw Fzzzzzzzz

I gave it a couple dozen attempts, but couldn’t ever get it to comply. This was the closest it got:

How was it capable of something so advanced (injecting humor/social commentary) while completely incapable of handling what you’d have thought would be its bread and butter? In my opinion, it was far stranger that it was sort of right than it would’ve been if it hadn’t added any text at all.

There’s probably a simple explanation/fix for all this, but I think I like it better this way. In fact, its confused, pathetic flailings were somewhat endearing. It sort of felt like I was performing a noble deed - or community service, at least (perhaps helping someone suffering from an obscure type of aphasia, or something, like those people who, inexplicably, can only speak in Pig Latin or in a Chinese accent).

But this also meant there was something slightly - yet unquestionably - uncanny about it all: the veneer of something attempting to emulate a human, but doing a hilariously bad job. (In these cases I’m always reminded of that farmer in Men in Black who gets "commandeered" by an alien - it’s all fun and games… until it decides to try and kill you.) Oh well. But it’s a useful parable, I think, as it sums up a lot of what we’re dealing with.

One thing I talk about in TIA is the trap we’ve all been sucked into with regard to AI and machine learning. There’s a recursive feedback loop whereby these devices - provided for us at next to no cost - proudly state how ready they are to be conversed with, addressed, given orders, etc. They lie “dormant,” listening for our voices, waiting to be told what music to play, or consulted as to what the weather’s going to be tomorrow, or asked to check how tall Timothée Chalamet is (or who he’s dating, etc.). The more we use them, though, the better they become at their jobs (which include personal assistant, stenographer, meteorologist, travel agent, and doctor). They even learn our voices and accents so as to never miss our call; they attune to our behaviors, our sleep patterns, our menstrual cycles, and our buying/listening/viewing habits so as to give us more accurate recommendations and feedback.

So: we train them, which then allows - indeed encourages - them to train (and influence, and shape) us. And this, in turn, means we get better at including them in our lives (to wit: giving them more data)… which of course means they can increase their involvement in turn. And so it continues.

A good example of this (from the now seemingly infinite array to choose from) is the recognition test you have to pass before some websites will load: the one where you’re given a grid of images and told to click on the ones with a bike or a cat or whatever. This kind of software purports to help us by making sure the tickets we’re trying to buy aren’t snagged by trawling bots to be marked up and resold by some shady third-party vendor. But we’re helping the software, too, by showing it what cats/bikes/whatever look like, which means it’ll be able to give you more accurate ads/data/feedback over time. Maybe this seems worth it to you: a little quid pro quo never hurt nobody. Besides, the help this stuff gives us is way more actionable, quantifiable, and instantly evident than whatever we give to it. But I guess the problem is that we aren’t given any clear information about the help we give: how much we’re giving, when it’s happening, how exactly it’s happening, and what it’ll be used for.

Maybe this still doesn’t bother you. Fine. It’s natural, of course, if you pay $49.99 for an Amazon or Google home assistant, to want to think you got the better end of the deal. But I can’t help feeling that maybe - just maybe - it’s worth considering that we don’t know best. I mean, how many billions of dollars have you and I put into R&D, marketing, psychographics, etc.? Sadly, I suspect there’s a pretty good chance these companies have figured out that giving their smart gadgets away is a mighty cheap investment compared to the value of the time/data/attention we give them in return. For confirmation, we need look only as far as their market valuations, which have skyrocketed over the past several years - pandemic or not. Has the average customer of these companies fared equally well over the last decade (or two, or three)? Decidedly not. All I’m saying is it might behoove us to reevaluate our relationship with these companies - and certainly the degree of trust we place in them. Although, yes, it is nice to have near-infinite data instantly available without ever needing to get off the couch.

(He’s 5’ 10”, by the way, is Timothée Chalamet.)